Keeping Cool

By Hugh Ashton

Data centers, the storage points of the information and technology that underpin our corporate lives, use massive amounts of energy. In turn, an equally large amount of energy is spent on cooling them down, and this has led designers to contemplate more environmentally friendly and less costly solutions based on purpose-built technology.

Bucket with a hole

Like all electrical appliances, computer equipment is inherently inefficient, meaning that the energy used by the unit is not all turned to good use. Much of it is wasted as heat, generated by ever-faster processors that are crammed into ever-smaller physical configurations (“blade servers”) and stacked in racks. This heat comes not only from the IT equipment but also from the associated power conditioning and backup equipment (data center managers have no wish to see thousands of servers die as the result of power spikes or outages). As a result, data centers are air-conditioned and cooled, equipped with complex chilled water systems and Computer Room Air Conditioners (CRACs).

However, it is an astonishing fact that in the majority of data centers, the heat generated by the cooling system is equal to that produced by the IT equipment itself. This is why the use of energy in most data centers is about as efficient as a bucket with a hole in it.

Moreover, with several percent of a developed country’s energy budget devoted to IT, it is obvious that any energy savings made on the environmental side of data centers will produce nationally noticeable energy savings, and thereby significantly help reduce carbon emissions. Mark Thomas, a consultant who has managed many data center construction projects in Tokyo, adds, “Companies may not be very interested in the ‘green’ aspects of energy saving, but they do like the bottom line of reduced running costs.” He contends that a gap in understanding between the IT and the engineering sides often contributes to high data center operational costs. “Every degree of cooling costs millions [of yen], and the IT side tends to over-specify the cooling requirements. 19°C as a room temperature is pretty ridiculous—you can quite easily go up to 22°C without damage to present-day equipment, which typically draws much less current, and therefore generates less heat than stated by the label on the back panel. You shouldn’t need a fleece [jacket] every time you go into the machine room.”

But Thomas also makes the point that cooling requirements are not constant. Different racks in a data center hold equipment producing different amounts of heat, and even the way the equipment is arranged within a rack can affect how much heat builds up. Not only do temperatures vary between racks and within a rack, points out Fumio Takei of Fujitsu Laboratories, but they also change from day to day as the workload changes. For example, a securities brokerage will put twice as much load on its servers during trading hours as outside them.

Monitoring inside the rack can help optimize the cooling requirements by altering the volume of cooling air as required, particularly if the system has been intelligently designed in the first place. But how to monitor? Networked thermometers integrated into racks have been available for some time now, providing accurate, up-to-the-second data feeds. However, as Takei points out, these sensors and their associated networking consume electricity themselves, which offsets some of their benefit.

Taking the pulse

Fujitsu Laboratories has refined a technique that allows instant, accurate temperature measurements throughout a data center using just one monitoring device. The technique sends laser pulses along a single optical fiber threaded through the racks and under the machine room floor, making it possible to determine the temperature at any particular point along the fiber.

The system uses a phenomenon known as Raman scattering (first described by the physicist Chandrasekhara Venkata Raman), which allows temperatures to be measured relatively easily by examining the light returned from each laser pulse and the way it has been distorted. Nanosecond timing of the sampling process allows the location of each sampled temperature to be determined to within about a meter, and the temperature itself to within a degree or two, over a fiber length of several kilometers.
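To see why nanosecond timing translates into meter-scale location accuracy, the following is a minimal sketch of the general principle behind fiber-based Raman temperature sensing. It is not Fujitsu Laboratories' method, whose signal processing the article does not detail. The group index of the fiber, the Raman shift of silica, and both function names are assumptions introduced purely for illustration.

```python
import math

C_VACUUM = 299_792_458.0      # speed of light in vacuum, m/s
GROUP_INDEX = 1.468           # ASSUMPTION: typical group index of silica fiber
PLANCK_H = 6.62607015e-34     # Planck constant, J*s
BOLTZMANN_K = 1.380649e-23    # Boltzmann constant, J/K
RAMAN_SHIFT_HZ = 13.2e12      # ASSUMPTION: approximate Raman shift of silica


def position_from_round_trip(t_seconds):
    """Distance along the fiber (m) for light that returns after t_seconds.

    The pulse travels out and the scattered light travels back, hence the
    factor of two.
    """
    return C_VACUUM * t_seconds / (2.0 * GROUP_INDEX)


def temperature_from_ratio(ratio, ratio_ref, t_ref_kelvin):
    """Temperature (K) from the anti-Stokes/Stokes intensity ratio.

    Assumes the ratio is proportional to exp(-h * shift / (k * T)) and that
    a reference ratio was recorded at a known reference temperature, so the
    unknown proportionality constant cancels out.
    """
    inv_t = 1.0 / t_ref_kelvin - (
        BOLTZMANN_K / (PLANCK_H * RAMAN_SHIFT_HZ)
    ) * math.log(ratio / ratio_ref)
    return 1.0 / inv_t


if __name__ == "__main__":
    # A 10 ns sampling interval corresponds to roughly one meter of fiber.
    print(position_from_round_trip(10e-9))
```

Under these assumed values, sampling every 10 nanoseconds separates measurement points by roughly one meter of fiber, which is consistent with the accuracy quoted above.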

Fujitsu Laboratories is pioneering new data center cooling technology

A simpler application of this principle has been in operation for some time to monitor tunnels for fires and to work out which end of the tunnel firefighters should be sent to. But the version refined by Fujitsu Laboratories adds an extra layer of sophistication: a specially developed signal-processing step, applied to the raw data from the returned pulses, presents a much more accurate picture of the fiber over its entire length. Though currently at the laboratory stage, this monitoring method may well find its way into selected operational sites in a few years, and is likely to become a fully fledged commercial product within five years.

Takei and his team point out a number of advantages of this system over solutions using networked “intelligent” sensing racks. The fiber is relatively cheap, and no modifications are needed to existing racks. The central laser and monitoring unit is not very expensive compared with other solutions, and it uses less power and network capacity than the older method of decentralized, rack-based monitoring equipment.

Going underground

However, both Thomas and Takei make the point that monitoring one variable is not enough, and that an integrated approach is needed, combining “in-box” (motherboard, etc.), “in-rack” and “in-room” measurements to produce an overall picture of data center health. Furthermore, this “big picture” then needs to be fed into a flexible and responsive environmental control system (preferably on a per-rack basis) to optimize the efficiency of the cooling process. At the moment, there is no fully integrated solution on the market, but the problem is by no means insoluble. Currently available individually controlled fluid-cooled racks are expensive, but Thomas feels that they represent a possible way forward as prices drop. However, they are repellent to many IT managers, who typically regard liquid and IT equipment as totally incompatible.

Under the earth: the future location for data centers?

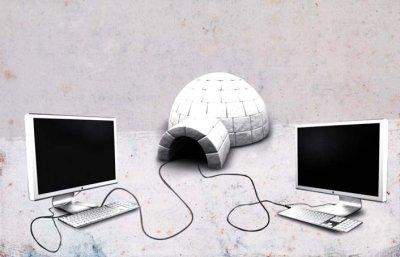

Sidestepping this issue entirely, a consortium of 11 information technology firms, headed by Sun Microsystems here in Japan, is working on a radical alternative: the construction of a large data center 100 meters underground, using existing tunnel space such as disused mines, modified for the purpose. These deep underground “rooms” remain at a constant 15°C, and naturally chilled groundwater can be substituted for the expensively cooled water used in data centers now.

Multinational organizations such as Citigroup, for whom a data center collapse could be a catastrophe, spend vast amounts of money on data center disaster prevention, locating emergency back-up systems in remote locations such as Okinawa. With the threat of earthquakes being a constant worry, underground bunkers could be one way of managing risk. Although this is still unconfirmed, the hope is that such sites lie deep enough that earthquakes, the vast majority of which destroy only shallow areas, will not collapse the bunker itself, even if the entrance shaft is more vulnerable. The US government already uses underground centers to protect its systems, and Sun Microsystems and its partners are hoping that the Japanese government will consider using these low-risk bases too.

But low risk is not the only attraction: these data centers could prove highly cost-effective as well. Reported projections indicate that such a center will use 50% less power than a traditional data center, potentially equating to savings of over ¥1 billion in power costs annually if the center were to house 30,000 servers and associated IT equipment. Of course, such data centers are not easy to implement. Large abandoned holes in the ground are not exactly common, and they are typically a long way from population centers. However, Japan’s advanced telecommunications infrastructure can compensate for the remote location, and for large-scale projects this approach, or one similar to it, may well be worth investigating.
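As a rough sanity check on those projections, the sketch below works backward from the three reported figures (50% less power, ¥1 billion in annual savings, 30,000 servers) to the power bill they imply. The function name and dictionary keys are hypothetical. Because the savings are reported as "over" ¥1 billion and the total also covers associated IT equipment, the results should be read as approximate lower bounds per server rather than precise costs.

```python
def implied_costs(savings_yen, savings_fraction, servers):
    """Back out the power bill implied by a reported saving.

    savings_yen      -- annual saving reported in the projection
    savings_fraction -- share of the baseline power bill that is saved
    servers          -- number of servers housed in the center
    """
    baseline_total = savings_yen / savings_fraction
    return {
        "baseline_total_yen": baseline_total,
        "baseline_per_server_yen": baseline_total / servers,
        "savings_per_server_yen": savings_yen / servers,
    }


if __name__ == "__main__":
    # Figures taken from the projection quoted in the article.
    for name, value in implied_costs(1_000_000_000, 0.50, 30_000).items():
        print(f"{name}: {value:,.0f}")
```

On these figures, a conventional center of the same size would be spending at least ¥2 billion a year on power, or roughly ¥67,000 per server, of which about ¥33,000 per server would be saved by going underground.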

For data centers which are not big enough to make it worthwhile going underground, solutions such as that being developed by Fujitsu Laboratories, allied to intelligent planning as advocated by Thomas, will undoubtedly help to isolate and reduce energy waste in this often overlooked area of corporate life.