Heavy Weather

Issue: November 2003

by Tim Hornyak

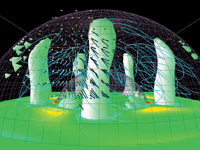

This is Kageyama's conception of the engine behind our planet's magnetic fields. He thumbs a button and another particle comes into being, tracing helical convection currents through the mantle as the perspective soars to a point several kilometers above the North Pole. Suddenly he flicks a laser wand and the mesh sphere disappears. The virtual reality chamber's walls go completely blank.

"The human eye is a nice tool to extract information from images," Kageyama notes as he removes his goggles. "We use this facility to visualize the 3D structure hidden in a sea of numerical data. By following the virtual particle's motion, we can visualize or understand fluid motion. It's very complicated, but we can extract some organization."

Kageyama is trying to understand the subterranean forces that make compasses point north, cause auroras to appear near the poles and ultimately shield our atmosphere from decimation by the solar wind.

Exactly how the magnetosphere is produced is a mystery being unraveled by scientists like Kageyama, a physicist at the Japan Marine Science and Technology Center (JAMSTEC). One reason Kageyama and others like him are ahead of the game is that they work on the most advanced computer in the world.

Power tool

Outside the fence surrounding the government-run Earth Simulator Center, you can hear the air conditioners humming around the massive hangar as the JPY40 billion NEC-built behemoth begins its work. Its primary mission is to render our world in digital form so that scientists can better understand environmental changes and processes, from the magnetosphere's origins to tectonic motion to sea temperature fluctuations.

"The primary objective is to make reliable prediction data for Earth's environmental changes, such as global warming and earthquake dynamics," says Tetsuya Sato, director general of the ESC, which is under JAMSTEC. "Already, global ocean circulation simulations have confirmed that mesoscale phenomena such as typhoons and rain fronts can be nicely reproduced."

It's been just over a year since actual research projects using the Earth Simulator were started in September 2002, with roughly 40 studies. While it's still too early for definitive conclusions from such complex analyses, the results so far are promising.

Takahashi is doing unprecedented weather simulations with a "coupled" ocean-atmosphere model that incorporates the creation and depletion of sea ice to understand the mechanisms of climate change and what role the periodic El Nino effect plays in the process. The work is especially timely amid the high number of extreme weather incidents around the world this year, from tornadoes in the US in May that killed 41, to the summer heat wave in France that is believed to have caused a staggering 15,000 deaths.

Such events have led the UN World Meteorological Organization, which usually occupies itself with compiling statistics, to issue a warning that hazardous weather incidents could continue to increase in number and intensity, squarely blaming global warming and climate change. It added that land temperatures for May 2003 were the warmest on record.

"If we continue with similar lifestyles, we may see more severe conditions," Takahashi says, noting that the task of accurately unraveling the relationships between local weather conditions, climate and global warming is an extremely complex task and would require a next-generation Earth Simulator.

For now, she is preparing for the future by turning to the past to shed light on the present: Another focus of her work is the world's paleoclimatic conditions tens of thousands of years ago. Observational data on the chemical makeup of corals, ice cores and marine sediments from cold and warm eras can provide a picture of the weather conditions early humans faced, and can contribute to our understanding of modern predicaments.

Of catfish and magic stones

"The Earth Simulator is a bit small for that, but by using it we'll be able to make preparations and some progress toward predicting earthquakes," says Sato. "Also: we'll be able to know whether true prediction is impossible by simulation or scientific efforts."

Despite inquiries into their electrosensory abilities, which they use to catch prey in murky water, studies with catfish in labs throughout the country have produced no firm conclusions about tremor timing.

Historical records dating to the 7th century indicate that thrust faulting of the Philippine Sea Plate has caused earthquakes along the Nankai Trough off Shikoku every 90 to 140 years, causing more than 2,500 casualties in 1944 and 1946. Some experts have forecast several tremors of magnitude 8 or higher in the area between 2020 and 2050. Meanwhile, Furumura has used the supercomputer to estimate the potential effects of the next quake in terms of the seven-point Japanese seismic intensity scale.

The machine can also be used in fields outside earth science. For instance, scientists are using its tremendous computing power to study the conductivity and strength properties of nanocarbon tubes, microscopic tubular structures a billionth of a meter in diameter that could be 30 to 100 times tougher than steel while only one-sixth its weight. Potential applications include electronics with a much higher transistor density, atomic-scale mechanisms, new aerospace materials and new energy storage devices.

Hello, Computenik

Linking them is 2,400 km of cable, enough to join Hokkaido to Okinawa, and surging through it all (including a lighting system) is roughly 7 million watts of electricity, at a cost of $7 million a year.

The water-cooled vector supercomputer based on NEC SX technology has a theoretical top speed of 40 teraflops, or 40 trillion floating point operations per second, and a main memory of 10 terabytes, which is about 10 trillion bytes. Visitors might expect to be greeted by the silky voice of the HAL 9000 from 2001: A Space Odyssey. But the sealed chamber, where the blue-green cabinets are arrayed in a circular pattern that evokes the Earth itself, is quiet save for the endless drone of air conditioners.

Meuer and his colleagues publish their rankings twice a year. The Earth Simulator remained tops in the latest edition, with a certified speed of 35.86 teraflops, far ahead of the second-place machine, Hewlett-Packard's ASCI Q, which clocked in at 13.88. Despite fierce competition in the small but strategically important $5 billion supercomputer market, Meuer says he expects the NEC instrument to retain its lead until June 2005, when IBM's ASCI Purple, a 100-teraflop machine commissioned by the US Department of Energy for nuclear weapons testing, will enter the scene.
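The figures quoted in this article allow a quick back-of-the-envelope comparison. The sketch below uses only the 40-teraflop theoretical peak, the 35.86-teraflop certified speed and ASCI Q's 13.88 teraflops; the variable names are illustrative.

```python
PEAK_TFLOPS = 40.0        # theoretical top speed quoted in the article
CERTIFIED_TFLOPS = 35.86  # certified speed on the Top500 list
ASCI_Q_TFLOPS = 13.88     # second-place machine

# Fraction of the theoretical peak actually achieved on the benchmark.
efficiency = CERTIFIED_TFLOPS / PEAK_TFLOPS

# How many times faster the Earth Simulator is than the runner-up.
lead = CERTIFIED_TFLOPS / ASCI_Q_TFLOPS

print(f"sustained/peak: {efficiency:.1%}")
print(f"lead over second place: {lead:.2f}x")
```

On these numbers the machine sustains roughly 90 percent of its theoretical peak and runs about 2.6 times faster than its nearest rival.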

In April 1997, the project received funding from the government, and Miyoshi spent many late nights over the next five years overseeing design and construction, which began in March 2000. The initial design, based on the processor technology of the time, called for a machine the size of a baseball stadium. But his dream became reality when the Earth Simulator was turned on two years later, boasting the key development of a highly efficient transfer rate between switches and a low delay time in data transfer.

Miyoshi died four months before the Earth Simulator was completed. In June 2002, it was ranked the fastest machine in the world.

"The arrival of the Japanese supercomputer evokes the type of alarm raised by the Soviet Union's Sputnik satellite in 1957," says Top500 coauthor Jack Dongarra of the University of Tennessee. "In some sense, we have a Computenik on our hands."

US manufacturers have been focusing on producing machines with lots of cheaper components in a reflection of the rising popularity of smaller, cost-effective computing with off-the-shelf chips led by the PC market.

Grid computing, for example, lets users access machines on the Internet or through private networks so that programs can solve complex problems by farming out small pieces of a task to a large number of machines. The SETI@home project attempts to sift through radio telescope monitoring data for signs of extraterrestrial life by harnessing the power of individual computers owned by over 4 million volunteers.
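The farming-out pattern can be sketched on a single machine. In the example below a local thread pool stands in for remote volunteer computers, and the work unit (a sum of squares) is a hypothetical placeholder; none of this reflects SETI@home's actual software.

```python
from concurrent.futures import ThreadPoolExecutor

def split(data, pieces):
    """Cut a list into roughly equal consecutive chunks."""
    size = max(1, -(-len(data) // pieces))  # ceiling division, at least 1
    return [data[i:i + size] for i in range(0, len(data), size)]

def work_unit(chunk):
    """Hypothetical small task handed to one worker: a sum of squares."""
    return sum(x * x for x in chunk)

def farm_out(data, workers=4):
    """Split the job, process the pieces concurrently, and combine the results."""
    chunks = split(data, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partials = list(pool.map(work_unit, chunks))
    return sum(partials)

samples = list(range(1, 1001))
total = farm_out(samples)
```

The approach works best when the pieces are independent, as here, so that workers never need to talk to one another while they compute.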

"It is very important for our mission to ensure that the Earth Simulator is used only for scientific or peaceful purposes," Kiyoshi Otsuka, a JAMSTEC official who worked at the ESC's Research Exchange Group said of visiting scholars. "We must check their research carefully."

The algorithm of nature

"The dominance of the Earth Simulator will continue for six or seven years in terms of practical performance," says Sato, who has already started lobbying Tokyo to fund the construction of a more powerful successor in seven years with the same budget as the first, a general-purpose "holistic simulator" that would be faster by a factor of 10 to the power of 3 or 4, and geared at tackling the two fronts of micro and macro phenomena, from protein structures to predicting climate change and, perhaps, earthquakes.

"The next-generation computer should be based on the algorithm of nature," Sato adds, "by adapting natural structure into the architecture of the Earth Simulator itself."

"In order to change humans' way of thinking, we need bigger general-purpose supercomputers," he says. "I mean something that can cover all of nature, from the microscopic to the macroscopic. We need the tools to deal with entire systems." He pauses thoughtfully. "Otherwise, we cannot change real lives." @

AKIRA KAGEYAMA IS STANDING at the center of the Earth. Around him, particles of light cascade, aquamarine vortices churn and arrows flow like schools of fish in a slow dance.

Tucked away amid moldering tatami shops and condominiums in a humdrum industrial and residential corner of Yokohama, the Earth Simulator is housed in a specially constructed building, taking up three floors and an area equivalent to four tennis courts. It became operational in March 2002 as the world's fastest supercomputer, so quick that its raw processing power equaled that of the 20 speediest US computers combined, far outpacing the previous title holder, the IBM-built ASCI White at Lawrence Livermore National Laboratory, which is used for nuclear weapons simulations.

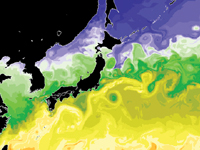

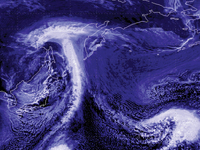

"We have already demonstrated that typhoons can be reproduced in global atmospheric circulation programs," notes Sato. Conventional simulators have a resolution of 100 km at most, but the Earth Simulator tightens the mesh to 10 km, providing an unprecedented level of detail. Meteorologists can now view the complex structures and origins of the whip-like Kuroshio Current, a major warm water current flowing northeasterly along the Pacific coasts of Taiwan and Japan and moderating weather in the two countries by conveying heat and moisture from the south to higher latitudes. They can also pull back thousands of meters above the sea and study simulated births of cyclones off Madagascar that are both higher resolution and more realistic than satellite photos.

"We have already demonstrated that typhoons can be reproduced in global atmospheric circulation programs," notes Sato. Conventional simulators have a resolution of 100 km at most, but the Earth Simulator tightens the mesh to 10 km, providing an unprecedented level of detail. Meteorologists can now view the complex structures and origins of the whip-like Kuroshio Current, a major warm water current flowing northeasterly along the Pacific coasts of Taiwan and Japan and moderating weather in the two countries by conveying heat and moisture from the south to higher latitudes. They can also pull back thousands of meters above the sea and study simulated births of cyclones off Madagascar that are both higher resolution and more realistic than satellite photos.

The big picture

Eighty years after the magnitude 7.9 Great Kanto Earthquake that killed 142,000 in the Tokyo region, other researchers at the ESC are also feeding past records into the Earth Simulator. Mining the wealth of Japanese observational data on quakes, researchers run numbers through an analysis similar to computerized tomography, used in medical scans to see inside the brain or other parts of the body. This enables them to deduce hidden crustal forms and to predict how seismic waves are likely to travel. Mantle convection and plate movement simulations are also possible and, when simulated together, may lead to actually predicting when earthquakes could strike and what damage they might inflict. That would be invaluable for Japan, which lies in a zone of acute crustal instability and is one of the most quake-prone regions in the world, with an average of 1,500 temblors a year.

Japan leads the world in earthquake studies, and the Earth Simulator may bring the seemingly impossible goal of accurate prediction a step closer. That would help enormously in a field where many researchers are still studying catfish, which -- according to legend -- behave violently before the ground shakes. (Indeed, an ancient Japanese myth has a giant catfish called Namazu keeping the archipelago afloat. Whenever it flips, earthquakes occur, and its thrashing is only kept in check by the protective deity Kashima, who controls the reviled troublemaker with a magic stone.)

Scientists like Takashi Furumura of the University of Tokyo's Earthquake Research Institute are paying more attention to an array of over 1,600 ground motion detectors recently deployed throughout Japan at intervals of 10 to 20 km. Readings obtained by 521 detectors during the magnitude 7.32 quake of October 2000, which struck Tottori Prefecture and injured more than 130 people, were used by Furumura to produce an Earth Simulator seismic wave propagation program, incorporating a subsurface model of western Japan. The 3D simulation, depicted as a multicolored wave rippling westward across the archipelago to Kyushu and Osaka, matches observations well, and the machine allowed for new movement detail in specific terrain such as sedimentary basins.

The Earth Simulator is at best a practical tool to pursue grand scientific goals -- but it is also a very impressive piece of machinery. The observation deck in its special quake-resistant hangar, which can weather a 7.5 temblor and is shielded from external electromagnetic waves and lightning, offers a view of what looks like an immense locker room: 320 2-meter-high blue cabinets, each containing two processor nodes, and 65 green interconnected network cabinets that coordinate computing tasks among 5,120 processors.
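The cabinet and processor counts fit together with simple arithmetic. The sketch below derives the node count and processors per node from the figures quoted here, and combines them with the 40-teraflop theoretical peak cited elsewhere in this article to estimate the peak speed of a single processor; the variable names are illustrative.

```python
CABINETS = 320           # blue processor cabinets
NODES_PER_CABINET = 2
PROCESSORS = 5120
PEAK_TFLOPS = 40.0       # theoretical top speed quoted in the article

nodes = CABINETS * NODES_PER_CABINET        # total processor nodes
processors_per_node = PROCESSORS // nodes   # processors sharing each node
gflops_per_processor = PEAK_TFLOPS * 1000 / PROCESSORS  # 1 teraflop = 1,000 gigaflops

print(nodes, processors_per_node, gflops_per_processor)
```

That works out to 640 nodes of eight processors each, with every processor contributing a little under 8 gigaflops of peak speed.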

"The Earth Simulator is a quantum leap in the development of supercomputers," says Hans Meuer, a University of Mannheim computer science and math professor who ranks the fastest computers. "Since mid-2002, it has been the No. 1 supercomputer in the world. We, the authors of the Top500 supercomputer list, have never seen before such a performance gain over the previous No. 1."

"The Earth Simulator is a quantum leap in the development of supercomputers," says Hans Meuer, a University of Mannheim computer science and math professor who ranks the fastest computers. "Since mid-2002, it has been the No. 1 supercomputer in the world. We, the authors of the Top500 supercomputer list, have never seen before such a performance gain over the previous No. 1."

The Earth Simulator was conceived when the former Science and Technology Agency's Hajime Miyoshi, sometimes referred to as the Seymour Cray of Japan (after the founder of Cray Inc.), imagined a huge vector-type computer 10 years ago. The 1995 Great Hanshin Earthquake, which devastated the Kobe area, and the Kyoto conference on global warming two years later motivated him and other researchers to design a supercomputer that could be used for earthquake and climate studies.

His invention shocked the US industry, which had long dominated high-performance computing; before it appeared, all of the six fastest supercomputers were in the US.

Not only did the Earth Simulator show that Americans had fallen seriously behind in the speed race, but NEC's use of unfashionable vector technology, which had been superseded by scalar models, also inspired a renaissance in vector supercomputers, according to Meuer. The Cray X1, announced in November 2002 and funded by the US National Security Agency, is a vector machine that proponents hope will return Cray to the head of the pack. In addition, the Earth Simulator illustrates the importance of atmospheric and solid earth science at a time when most US supercomputers are being used by the Department of Defense to simulate nuclear warhead explosions.

Director General Sato must also keep an eye on his US peers. He knows the Earth Simulator's days as No. 1 are limited, citing IBM's Blue Gene/L, which will have a theoretical peak performance of 367 teraflops and will be part of the US National Nuclear Security Administration's Advanced Simulation and Computing Program.

Despite the government's muted interest in the Earth Simulator -- it has been visited by many foreign VIPs, but the highest official from Nagatacho was the education minister -- Sato remains hopeful.
